Intelligent Document Chunking for PHP

I've been working on an idea for an open source Laravel application that lets you chat with your documents using local LLMs. However, building such an app requires a chunking strategy. Basically a process to break documents into semantic pieces that preserve meaning while fitting within an embedding model's context window.

Python developers have countless options for this, but the PHP ecosystem had nothing I could find as of the date of this post. Rather than spin up a Python service I decided to build a native PHP solution.

The result is Markdown Object, a package that intelligently chunks Markdown while preserving document structure and semantic relationships.

#Why Chunking Matters for Vector Search

If you've worked with vector databases and embeddings, you know that your chunking strategy directly impacts search quality. Throw an entire document into a single embedding and you lose specificity. Split it into arbitrary 500-character chunks and you destroy semantic meaning.

This is the problem Markdown Object attempts to solve. It converts your Markdown into a hierarchical object representation, then generates chunks that maintain their semantic context through breadcrumbs built from document headings.

#How It Works

The package builds on top of League CommonMark for parsing and Yethee\Tiktoken for accurate token counting. Here's a basic example:

1use League\CommonMark\Environment\Environment; 2use League\CommonMark\Parser\MarkdownParser; 3use League\CommonMark\Extension\CommonMark\CommonMarkCoreExtension; 4use BenBjurstrom\MarkdownObject\Build\MarkdownObjectBuilder; 5use BenBjurstrom\MarkdownObject\Tokenizer\TikTokenizer; 6 7// Parse your Markdown 8$env = new Environment(); 9$env->addExtension(new CommonMarkCoreExtension());10$parser = new MarkdownParser($env);11$doc = $parser->parse($markdown);12 13// Build the structured model14$builder = new MarkdownObjectBuilder();15$tokenizer = TikTokenizer::forModel('gpt-3.5-turbo');16$mdObj = $builder->build($doc, 'guide.md', $markdown, $tokenizer);17 18// Generate semantic chunks19$chunks = $mdObj->toMarkdownChunks(target: 512, hardCap: 1024);Each chunk that comes out includes not just the content, but also its location in the document hierarchy:

1foreach ($chunks as $chunk) {2 echo implode(' › ', $chunk->breadcrumb) . "\n";3 // Output: "guide.md › Getting Started › Installation"4 5 echo $chunk->markdown;6 // The actual Markdown content, ready for vectorization7}#The Art of Finding the Right Chunk Size

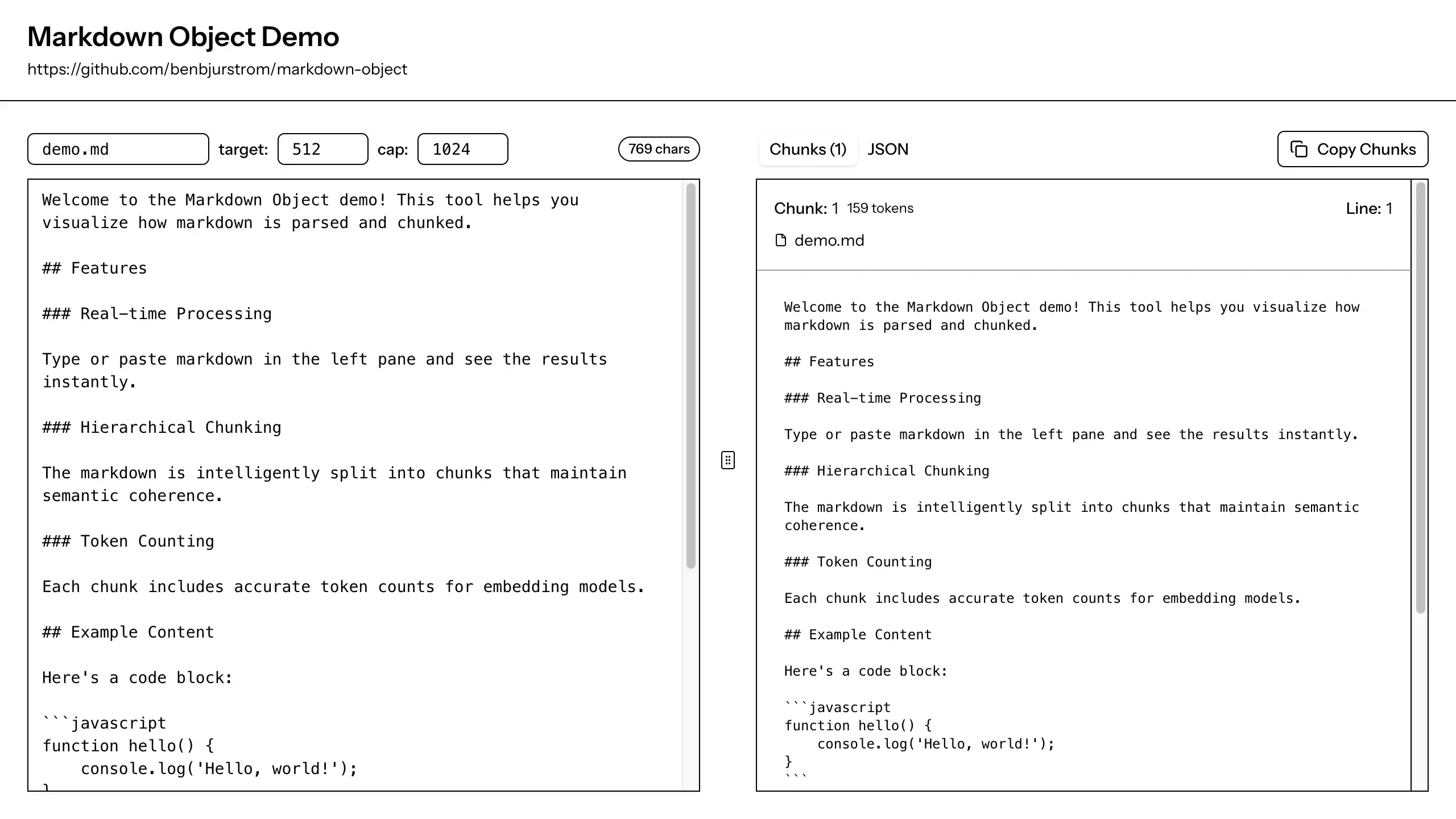

After building the core functionality, I quickly realized that effective chunking isn't just about the algorithm. It's about finding the right balance for your specific content. That's why I built a interactive demo application that lets you experiment with your own content in real-time:

The demo app lets you paste in your Markdown, adjust the target and hard cap parameters, and immediately see how your content gets chunked. You can see exactly where each chunk starts and ends, what breadcrumbs it carries, and how many tokens it contains.

#Hierarchical Greedy Packing

Under the hood, the package uses what I call "hierarchical greedy packing" to create chunks. The algorithm respects your document's natural structure:

- If the entire document fits within the hard cap, it returns as a single chunk

- When too large, it splits at the highest heading level first (H1, then H2, etc.)

- It greedily packs sibling sections that fit together within the token limit

- Large paragraphs, code blocks, and tables intelligently break at the target boundary

This approach ensures that related content stays together when possible, while still respecting token limits for your embedding model.

#Beyond RAG: The Object Representation

While I built this primarily for vectorization workflows, the intermediate object representation may prove useful for other tasks too. The structured model gives you programmatic access to your document's hierarchy, making it easy to extract specific sections, generate table of contents, or transform content in other ways.

You can even serialize the entire structure to JSON for caching or further processing:

1// Serialize to JSON2$json = $mdObj->toJson(JSON_PRETTY_PRINT);3 4// Deserialize later5$mdObj = MarkdownObject::fromJson($json);#Getting Started

If you're building a RAG application in PHP, you can install the package via Composer:

1composer require benbjurstrom/markdown-objectThe package is open source and available on GitHub. If you run into issues or have suggestions for improvements, feel free to open an issue or submit a pull request.

For the complete Laravel RAG stack, pair this with my pgvector driver for Laravel Scout. Together they provide a fully native PHP solution: Markdown Object handles the intelligent chunking, while the pgvector driver manages vector storage and similarity search with Scout's model observers keeping everything in sync automatically.